Running an AI on one’s own computer is pretty interesting to me. The experience reminds me a lot of the distro-hopping I did in the mid-2000s. You’re not committed to any one tool, only to the one that works best on the hardware you have.

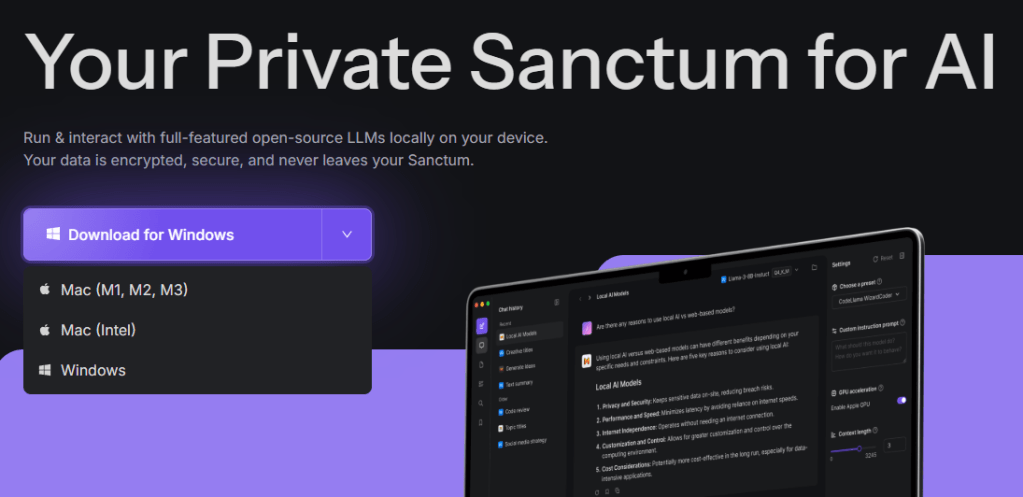

As you may recall if you’ve been reading this blog, I had fun with LM Studio, but I thought I’d give Sanctum a try after reading this ZDNET article by Jack Wallen. His article seems a bit more focused on privacy.

Here are one-paragraph summaries of the article from two models running locally in Sanctum AI:

Llama’s version: Sanctum is a locally installed AI app that offers users a high degree of flexibility and customization. By running queries on a local machine, users can avoid sending sensitive data to third-party servers, ensuring their privacy and security. Overall, Sanctum is an excellent choice for users who value their privacy and want a secure way to conduct AI research.

Mistral-7B-Instruct-v0.3: You have tried Sanctum’s local AI app, which is designed to keep your data private. This locally installed AI tool can assist you with all (or some) of your research, without sending any of your queries to a third party. The app’s encryption ensures that your data remains secure and never leaves the app.

Not bad, eh? The Mistral version is a little off: it assumes the person who submitted the article is its author. For comparison, consider this summary by Mistral AI Personal, which I found simply flies:

The article discusses the author’s experience with Sanctum’s local AI app, highlighting its privacy features. Unlike cloud-based AI, Sanctum keeps data encrypted and secure within the app, ensuring queries and responses remain private. The app is praised for its open-source nature, support for various AI models, and ability to function offline. The author also provides a step-by-step guide on installing and setting up Sanctum on macOS, emphasizing the importance of privacy settings and model selection.

Installation

I found installing Sanctum AI to be a bit of a pain. The initial install appeared to go well, but then I found myself installing DLLs that the installer had not added. It wasn’t too difficult, but LM Studio had it beat.

This security feature should be of some comfort to privacy-minded users:

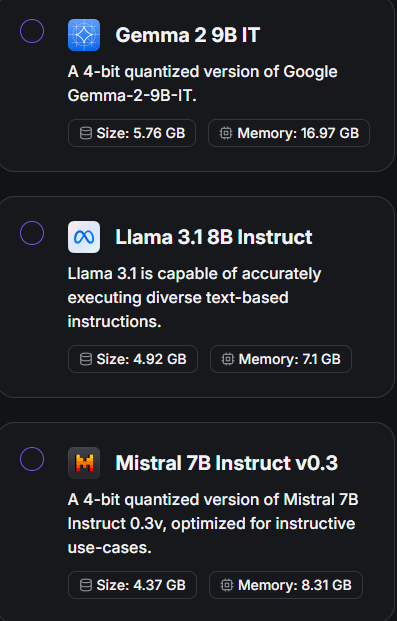

And these are the models that Sanctum AI lists as available to download. I grabbed the latter two:

Overall, I like Sanctum AI a bit more than LM Studio, and I will dump LM Studio later. I’ve noticed it reminds me a bit of Claude Pro: it runs out of tokens and stops pretty quickly, which is annoying.

Discover more from Another Think Coming