After watching DeepSeek misinterpret my model instructions in Msty, a local AI tool, I dumped it. Who wants to have endless conversations with their device about how to do something? Llama showed slightly better results, and Phi 4 did better still, though it ran slower on my machine. Phi 4 produced nice results on the same prompt I used for the others.

After loading several LLMs via Msty to find the one that worked best with my megaprompt, then deleting the failures, I found Mistral a happy discovery. It seems to work fine in both LM Studio and Msty, but to be honest, its results just aren't up to the standard of ChatGPT on the web. A bit of a disappointment, but c'est la vie.

Mistral’s summary of a PDF:

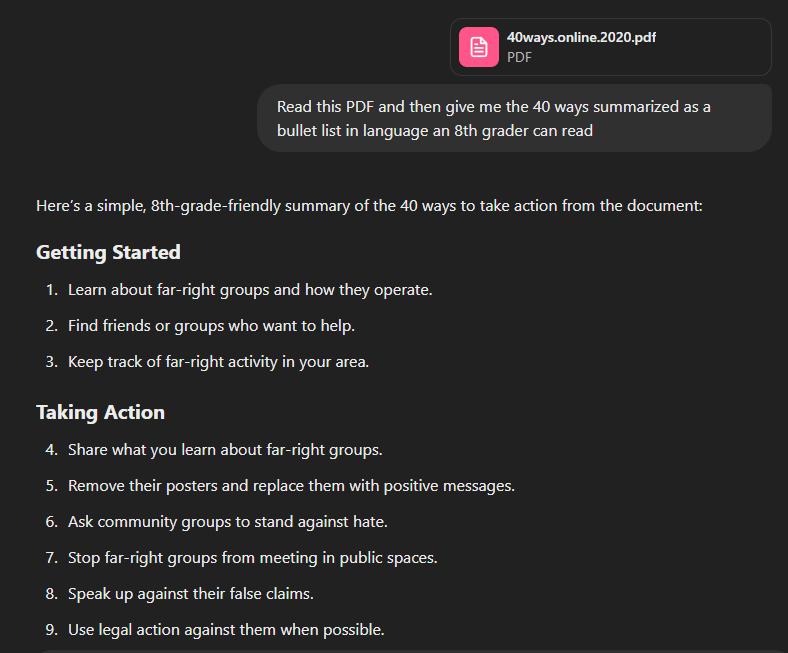

And here is ChatGPT’s:

That’s all. Just a quick update on what works.

Discover more from Another Think Coming