This piece by Ethan Mollick, The Cybernetic Teammate, could have profound implications for workplaces.

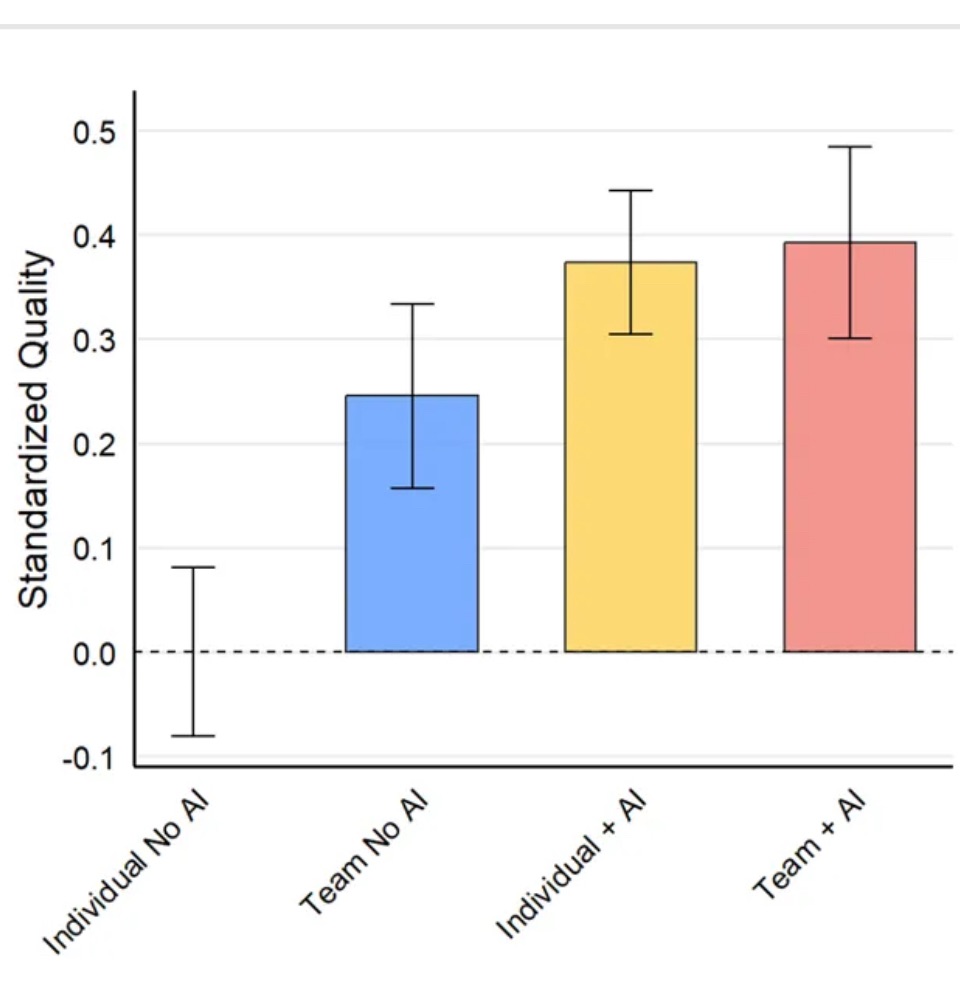

Mollick shares this graph:

Consider some of the key ideas:

- Teams outperformed individuals by 0.24 standard deviations without AI.

- Individuals with AI matched the performance of teams without AI.

- Less experienced employees with AI performed comparably to teams with experienced members.

- AI helped bridge functional knowledge gaps and elevate performance.

- AI can function like a teammate, enhancing performance and expertise sharing.

- Future work involves reimagining teamwork and management structures.

- Organizations need new thinking beyond technological solutions.

As someone who uses AI daily in my work, I find that knowing the capabilities of various AI tools helps me get things done. I tend to agree with others’ observations that AI isn’t always a time-saver. But it IS a thought partner, or, as Mollick et al.’s research suggests, a teammate.

It does make me wonder how school organizations will use AI in this way, individually or in teams, to solve problems. I also ask myself, “How well does this work in situations where work processes are not so transparent or well-documented?”

For example, when using AI, I find myself charting a new path, trying to figure things out. I only create a custom GPT after I have trod the path many times, which is time-consuming. It is a process of doing and reflecting, then translating that into prompts and customizing the results.

Of course, that happens anyway… but AI makes rapid prototyping and testing faster and easier. There are some profound implications for workplaces.

Overshadowing all the positives in the study are real fears: job displacement, the use of AI without built-in scruples, and the deployment of AI by ruthless oligarchs.

Consider this point from a collection of articles about AI:

> …success lies not in wholesale adoption or rejection of AI, but in thoughtful implementation that prioritizes educational values, equity, and human relationships.
>
> The future of AI in education will be determined not by the technology itself, but by how we choose to shape and use it in service of learning. (Source)

That captures the attitude towards all ed tech, no? I bet some are still looking for a metaphorical sabot to toss and slow things down or derail the effort.

After all, recent news about the raiding of US citizens’ confidential data to feed the AI Beast is really a story about bad actors profiting from people’s data. The human track record does not inspire trust.

While Mollick’s work is positive, it must also be viewed through political and business lenses, given forces that threaten the well-being and welfare of all. At what point will this no longer be the way we, as a human race, do things?

Discover more from Another Think Coming
