In one of his missives to followers, Steve Hargadon writes something my memory assigned to Audrey Watters (incorrectly, as I noticed when I went back to check the source). I should have known better.

Note: I can see some readers rolling their eyes at a “philosophy-inspired” response. I remind you, this is an exercise in fun, not a serious treatise. For that, go read OLDaily.

Steve’s angle had elements of positivity, while Watters’ brilliant analysis often eviscerates Ed Tech (great work, Audrey!) in schools, including AI. I thought it might be Neil’s “More work for teacher? The ironies of GenAI as a labour-saving technology,” which I skimmed in the wee hours of the morn and sent to my work colleagues. But it wasn’t.

Old Temptations Beckon

I love how Steve’s angle summons old temptations, habits ingrained in education and ed tech lovers, like a good person cozying up to a familiar:

We’ve been here before. We keep expecting big technology breakthroughs to “revolutionize education,” and now it’s AI. Once or twice a decade, a new tool promises to crack open the system and fulfill our “better angels’” dream of having education help fulfill every child’s potential. Every time (me included), we are tempted to buy the hype—only to watch it fade into the same old grind. AI’s the latest contender, and the buzz is there. (source)

It’s easy to be cynical and bitter about the failure of technology. The question I have is, “Why do we keep looking to technology to bring about transformative change?” That is, why do we expect it to make things better?

Some say that new technology gets layered onto the old, hyped not because it’s REALLY going to make things better, but because it’s going to make a lot of people money. That’s why change happens: not because we are working towards better, but because we are generating new sources of revenue. The hype is a natural part of that.

How Schools Fail…Again?

Gee, that is cynical. New tech, new hype is designed to make the people pushing it money. Big Tech spends money to make money. AGI is going to make someone money, even if it turns out we need people to do the work anyways. For a short time, people will lose their jobs and businesses won’t have to pay for sick leave. Instead, they’ll spend money on web servers and services, get tax breaks for being “new and innovative,” while regular people trying to make a living have to figure out how to make do with less.

In the end, Hargadon’s message isn’t about AI. It’s about how bad schools are, how they fail our children, and how we lie to ourselves about what they do.

“You can’t have your cake and eat it too.”

I really like how Steve contrasts generative and agentic in his piece, but in the end, they are simply another way of embracing a hybrid model. We want a system that combines probabilistic and deterministic AI models, but some might see them as mutually exclusive. We want strict assessments that grade everyone the same, but also ones that adjust grades based on factors specific to the student. We can imagine both existing in place, but consistent implementation is destined for failure (because people aren’t machines).

And, those models fall short even as they serve as a nice analogy for schools. He writes:

Generative teaching and agentic learning could be our compass, but only if we grab the wheel, drop the hype, start realizing the harm our current school model does to most kids, and give the next generations something better. The story we tell about schools is a lie—a comforting one that keeps the machinery going—but a lie nevertheless. (source)

This is like having two compasses, but each tells you to go in the opposite direction. You end up frozen in place. At some point, you have to make the call and someone isn’t going to like the results.

Philosophy as a Solution

Human beings are fundamentally flawed, unable to escape their programming towards selfishness, cruelty, self-deception, and social irresponsibility. You know, this reminds me of something that explains why Hargadon may be seeking to install dueling AI models as a compass to consult rather than rely on school to establish socially approved rituals of behavior.

Consider the perspective of Xunzi, a Chinese philosopher:

Human nature lacks an innate moral compass, and left to itself falls into contention and disorder, which is why Xunzi characterizes human nature as bad. Ritual is thus an integral part of a stable society. He focused on humanity’s part in creating the roles and practices of an orderly society, and gave a much smaller role to Heaven or Nature as a source of order or morality than most other thinkers of the time. (source)

More on Xunzi

I find myself fascinated by Xunzi and his relevance to our AI moment. I never read or heard about him in school and only found him thanks to AI analysis of a draft of my writing. Xunzi’s concern…

…is what people should do, and anything that might confuse or detract from that is a waste of time. We know that Nature is invariable, and we know the Way [The primary attraction of the Star Wars The Mandalorian society, right?] to get what we need from Nature to live, and that is all we need to know.

If AI (or ed tech or whatever) distracts from what children or adults need to do or learn in the classroom, then it’s a “waste of time.” The important thing is figuring out what that “need to do or learn in the classroom” actually is.

And, by all accounts, we already know that. We know students need to be critical thinkers (a skill that is taught poorly or not at all), to have core content knowledge that is accurate to facts and evidence, and to know how to use that knowledge to effect. All else is really a waste of time and money.

Could AI help us find the way, a path that does away with the uncertainty by embracing it?

AI in the Classroom

When we think of AI in the classroom, ethical considerations need to be at the forefront. Since humans made AI and trained it on our work, problems of bias and fairness are to be expected. Teachers have to learn to identify potential bias, ensure equitable outcomes, and align AI use with ethical principles and educational goals. That can be difficult when you consider that some see the use of AI as unethical from the start. Transparency about data collection, training, and the safeguarding of data is also essential.

Maybe if there was a model that helped…let me ask and see what AI can come up with:

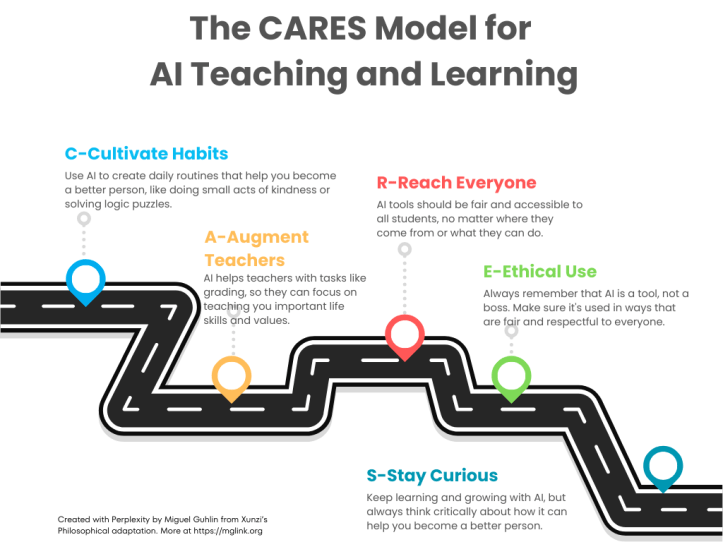

- C – Cultivate Habits: Use AI to create daily routines that help you become a better person, like doing small acts of kindness or solving logic puzzles. Helps you build good habits that make you a better friend and community member. For students, use AI to remind you to do something kind each day.

- A – Augment Teachers: AI helps teachers with tasks like grading, so they can focus on teaching you important life skills and values. Ensures teachers have more time to guide you personally. For students, ask your teacher how AI can help them teach you better.

- R – Reach Everyone: AI tools should be fair and accessible to all students, no matter where they come from or what they can do. Makes sure everyone has equal opportunities to learn and grow. For students, help others by using AI tools to make learning more accessible for everyone.

- E – Ethical Use: Always remember that AI is a tool, not a boss. Make sure it’s used in ways that are fair and respectful to everyone. Keeps AI from being used unfairly or in ways that hurt people. Always check if AI is being used in a way that’s fair to everyone.

- S – Stay Curious: Keep learning and growing with AI, but always think critically about how it can help you become a better person. Encourages you to keep exploring and learning new things. Stay curious about how AI can help you grow as a person.

That’s a “Xunzian” approach to AI in education created with Perplexity. Thanks for coming along on this thought exploration with apologies to Steve Hargadon, Xunzi, and the AI resisters out there who loathe the thought of AI being used at all.

Wait, Wait

For fun, I wondered, what might this look like from Bertrand Russell’s perspective? That’s another blog entry. 😉
