I ran across Bill Ferriter’s post on LinkedIn, quoted below, and decided it needed a response.

The Problem

Educators using AI are setting up a situation where they have access to AI tools, but students do not. Some see this as hypocritical or two-faced. Why do educators get access to AI tools that make their work easier, while students are prohibited from using them?

As I see it, students need to develop AI discourse competence. I explored this in more detail in another blog entry, which quotes the following:

“If you use GenAI for all your assignments, you may get your work done quickly, but you didn’t learn at all,” Wells said in a statement. “What’s your value as a graduate if you just off-loaded all your intellectual work to a machine?” https://www.byteseu.com/1082444/

Teachers, by contrast, aren’t required to “learn” their content or subject when preparing lesson plans, designing entry/exit tickets, or crafting assignments; they already know it. Rather, they are leveraging that prior learning to get routine teaching tasks done more quickly.

What we need isn’t AI literacy per se, but rather GenAI discourse competence, and students need it as well. It’s defined as:

…the ability to confidently discuss generative AI’s applications, limitations, and ethical considerations within your field.

It means knowing when and how to use GenAI, recognizing its impact, and engaging in thoughtful conversations about its responsible use.

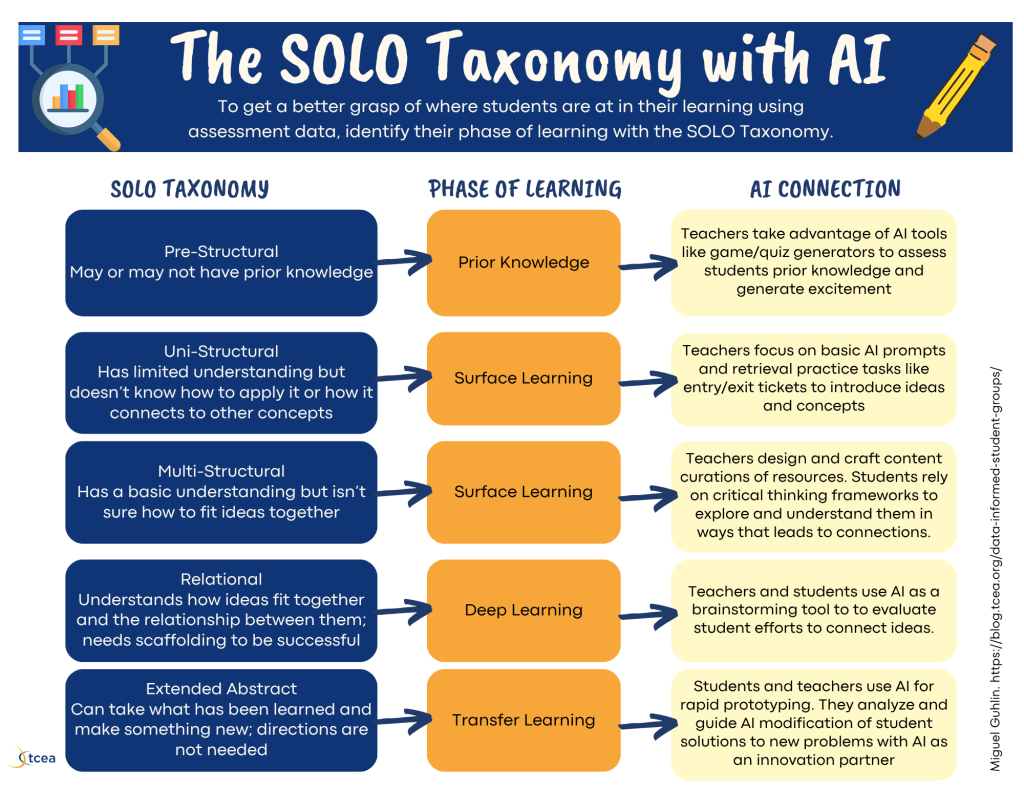

Most people who look at GenAI in education take a cognitively biased “black or white, yes or no” approach, but GenAI use requires more nuance. That’s why I encourage the use of the SOLO Taxonomy as a way to decide WHEN and HOW to use GenAI in the classroom.

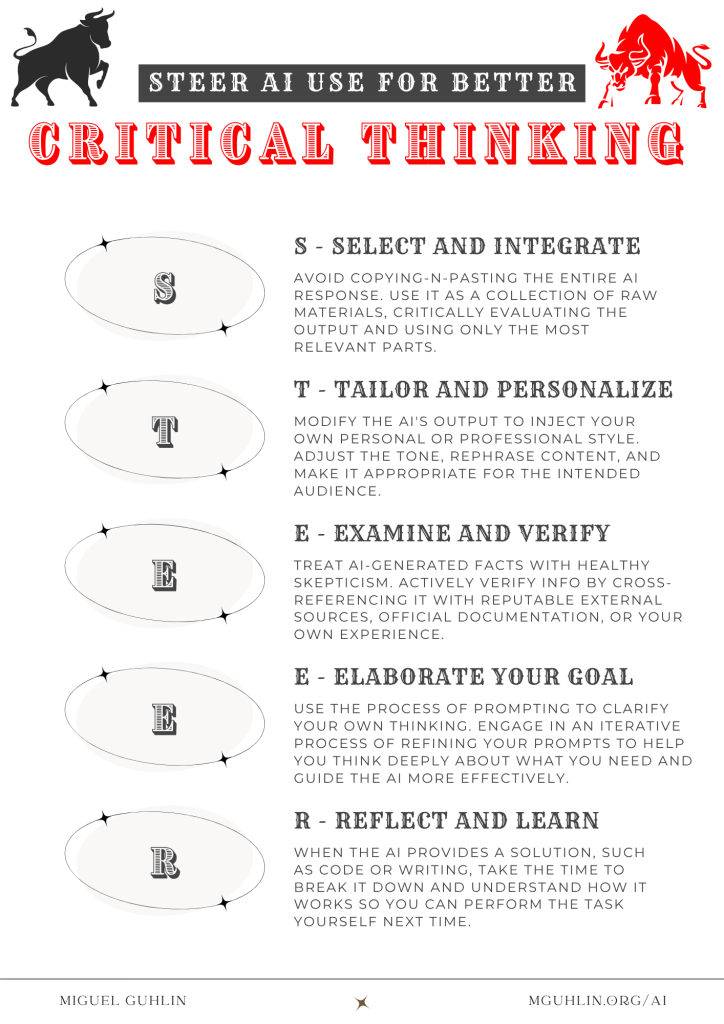

One approach worth exploring is STEER AI Use for Better Critical Thinking.

OK, there are a million similar frameworks out there. The question is, “How many are finding their way into the policies and guardrails that schools are using?”

Bill’s Post

My daughter’s school has a fairly strict policy aimed at detecting students who use AI to complete assignments.

In addition to using popular AI detection tools, they also examine revision histories on documents and timestamps to assess the likelihood that a student could complete the writing submitted within the timeframe indicated by the timestamps and revision histories.

They have successfully scared my kid into never even considering using AI on assignments. She’s convinced that if she does, she will be given a zero and written up for violating the school’s academic integrity policy — which, she’s been told, will be on her permanent record and could prevent her from getting into college.

So, I ran a recent email from a staff member through an AI detector just now.

Turns out that 72% of it was likely generated by AI.

Does that bother me?

Nope.

Not only are AI detectors notoriously inaccurate and unreliable — research shows that they correctly identify content as generated by AI somewhere between 4 and 6 times out of ten — but using AI to speed up things like drafting frees you to think more deeply or to invest that time in more meaningful work. If this school employee uses the time saved responding to me to make some of the changes that my child needs to succeed in school, that’s a win-win.

But what bugs me is that the school has made it incredibly clear to students that AI “is cheating” and that if they are caught using it in any way at all, “there will be consequences.”

If that’s going to be the stance we take with students, shouldn’t we take that same stance with ourselves?

Why is it that we are using AI tools to facilitate our work while simultaneously suggesting to students that integrating AI support into their workflows is evidence of a character flaw or a lack of academic integrity?

And wouldn’t it be better to show students ways that AI can be used as an assistant to support learning? After all, that’s becoming a part of our workflow. If a part of “preparing students” is getting them ready for the world of work — which should very much be a part of our goals in high school — wouldn’t that be a more responsible approach for us to take?

Source: Bill Ferriter via LinkedIn

My Response

Thanks for sharing your perspective, Bill. May I use some of the points you mention as a way to explore my own reactions and thoughts on this topic?

- AI Detection Tools. Your daughter’s school appears to be employing them as a boogeyman, a way to scare children straight. These tools, however, have been shown to be unreliable. Even your own use of one to suggest that school staff are relying on AI is no real proof that they did. Of course, you acknowledge this point.

- AI is NOT to be used. You make the point that it bugs you that staff rely on AI while children are prohibited from using it. It’s as if a double standard is being applied: adults are using something children are not permitted to use. The truth is, of course, that adults are permitted many tools and technologies that children are denied. Children are denied them because it is in their best interest NOT to use those tools or take those shortcuts.

We know that children can’t access the unfiltered internet, use personal electronic devices, enter certain areas, leave campus whenever they want, consume the same range of food and drinks that adults can, veto classroom resources, or control the physical environment, and many more things outside the classroom are prohibited to them as well.

Simply put, it does not make sense to treat adult educators and children the same when it comes to AI use. No doubt you are familiar with how critical productive struggle is for the human brain. Even adults suffer the effects when someone else does their thinking for them. This makes it all the more essential that students be denied AI access at the appropriate moments, and that their access be tightly scaffolded (e.g., AI in the Writing Workshop is a book that describes its authors’ experiences doing this).

- Unprepared for Work. The argument that students are “unprepared for work” if they haven’t used AI is unconvincing. It is unconvincing because, as someone who uses AI in my own work, I know that what matters most is for students to learn to think critically and to retain enough knowledge long-term to use generative AI in a way that is actually useful.

Finally, I agree that finding a way to scaffold AI use with students that doesn’t shortcut learning and critical thinking is essential. Many are struggling to figure out exactly HOW to do that in their situations. The easiest approach for schools, of course, is to engage in “black or white, yes or no” thinking. That approach is the problem, and it is likely to change rapidly as people learn more. Thanks for reading; I offer these thoughts as imperfect and incomplete.

Discover more from Another Think Coming