In a not-so-surprising twist, I decided to drop other GUI platforms for interacting with local AI. Two things became clear: I needed to optimize for speed, and complicated solutions weren’t going to work for me.

Why forsake Msty, LM Studio, and similar tools, you may wonder? Even though setting up Ollama with Open Web UI isn’t exactly obvious, how-to tutorials abound.

If I had one complaint, it was that nothing persisted from one run to the next. That is, when I shut down my machine and logged back in, any knowledge stacks, tools, or models I had added via Open Web UI disappeared. Of course, the problem was my fault, not Open Web UI’s.

To resolve it, I realized I had to back up and start my installation over. Originally, I had installed Open Web UI with Python instead of Docker because a Windows Subsystem for Linux (WSL) update had failed. So, I had to take some steps to get a proper Docker-based install of Open Web UI.

Note: I briefly considered trying one of these other GUI alternatives for Ollama but decided to stick with Open Web UI for now. Let’s see if that holds true as I go through the installation (spoiler alert: Nope!).

Installing Docker Desktop for Personal

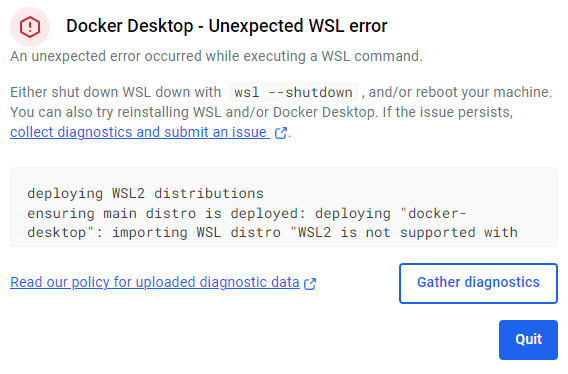

What a pain. I kept getting the “Docker Desktop – Unexpected WSL error” message when attempting to get things going.

After opening PowerShell as an admin and installing WSL and Ubuntu, I got a message that virtualization was NOT enabled in the BIOS. The error persisted, and upon reflection, I decided, “Sorry, Open Web UI, too much trouble.” You have to admit, changing BIOS settings at startup isn’t a beginner activity, and who the heck wants to go through all that? Sheesh.

So, back to that list of Ollama GUIs, looking for one that wouldn’t require changes to my BIOS. I decided to give Page Assist a try since the author of the article seemed to like it.

Ollama GUI: Page Assist

The author of this article appears to recommend Page Assist (scroll down to see other extensions for Firefox). Apparently, you don’t have to mess with your BIOS, and it’s a browser extension for Chrome and Firefox. I installed the Chrome version in my Brave browser.

Wow, this sure seems a heck of a lot easier than Open Web UI. I was surprised by the features: easy web search, Knowledge Stacks, and OpenAI API access.

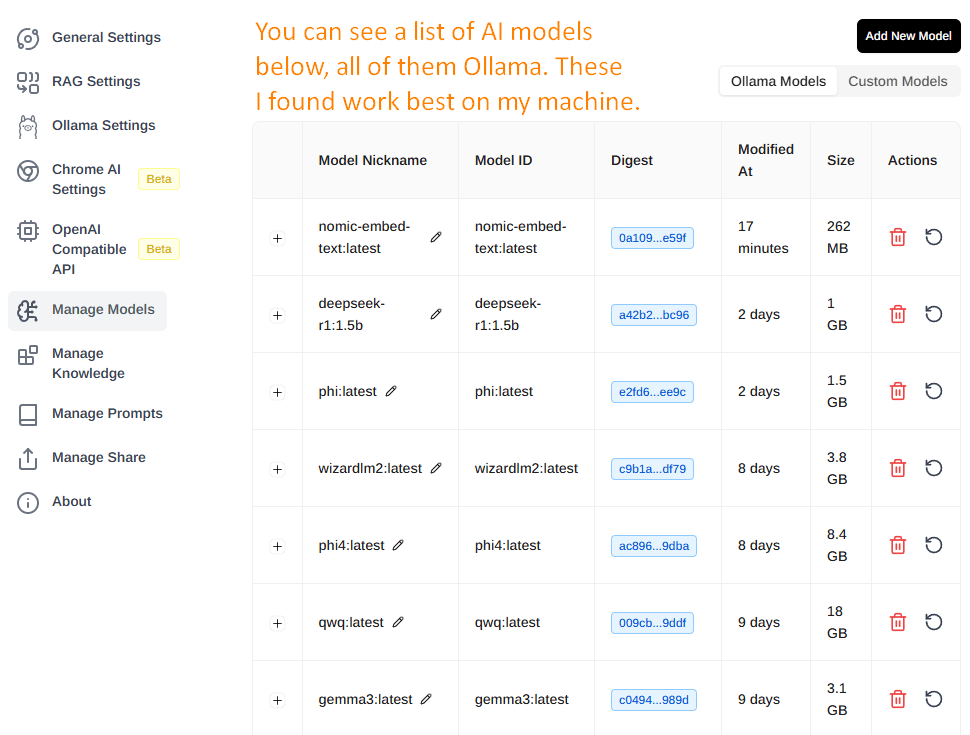

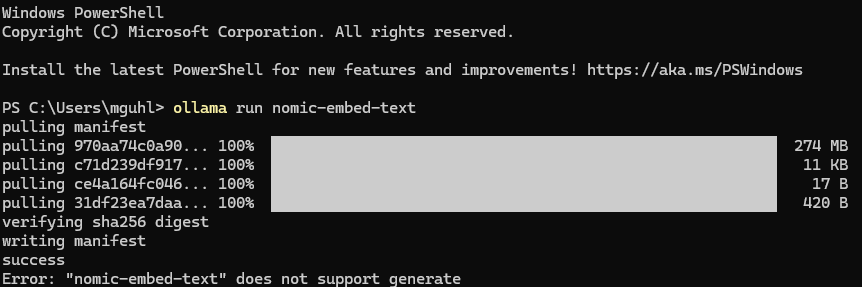

I did have to add one more model to Ollama, the nomic-embed-text embedding model, for the Retrieval-Augmented Generation (RAG) feature, but that was pretty easy:

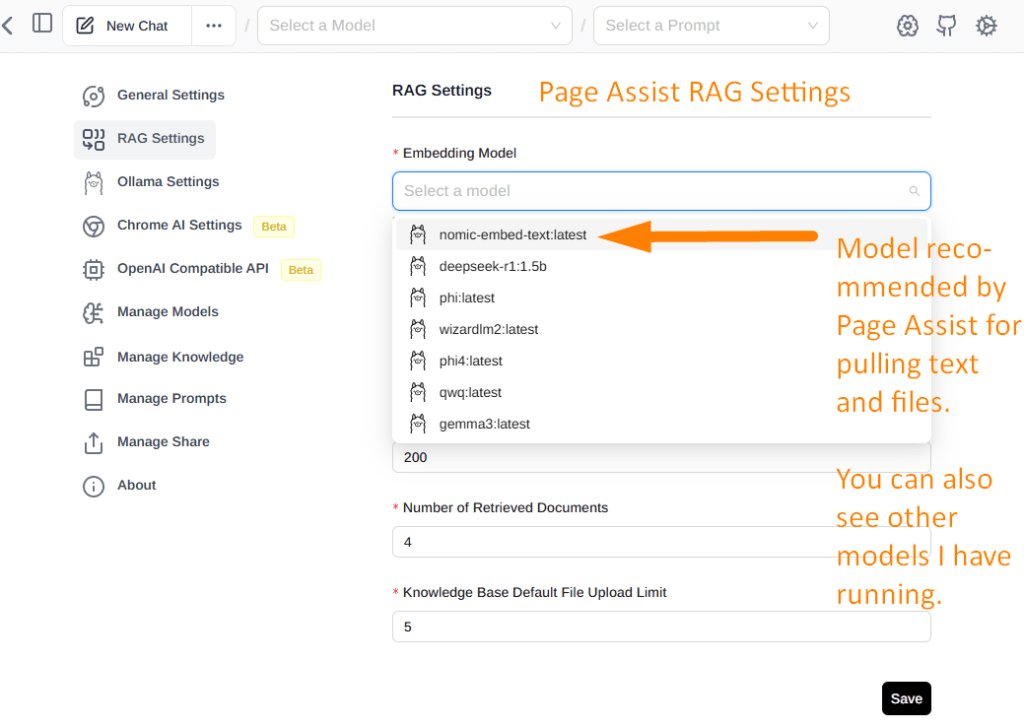

I probably should have typed “ollama pull nomic-embed-text” rather than “ollama run nomic-embed-text,” but hey, it still worked. You can see what this looks like in the Page Assist settings:

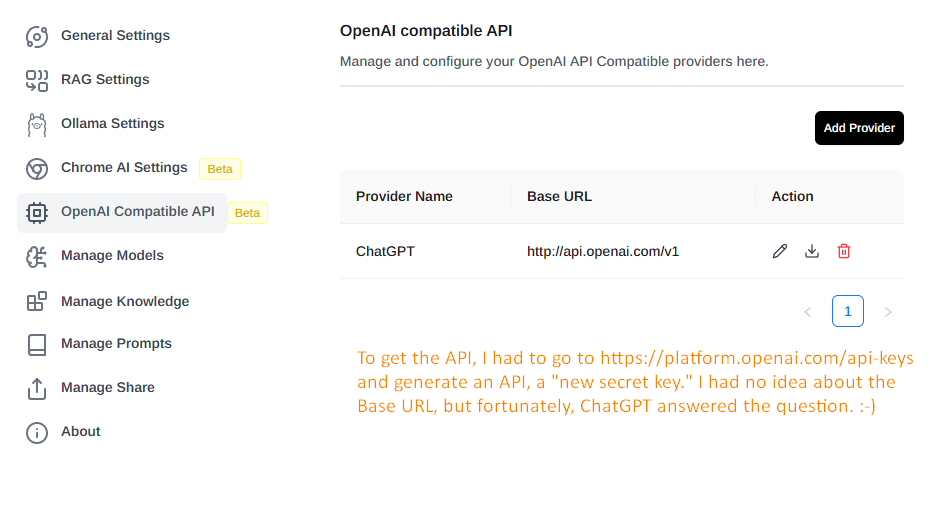
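For the curious, the embedding model that Page Assist uses here can also be reached directly through Ollama’s local REST API. This Python sketch is my own illustration, not Page Assist’s code. It assumes Ollama is running at its default address (http://localhost:11434) and is recent enough to expose the /api/embed endpoint (older releases used /api/embeddings with a “prompt” field instead). The function names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_embed_request(text: str, model: str = "nomic-embed-text",
                        base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/embed endpoint (hypothetical helper)."""
    payload = json.dumps({"model": model, "input": text}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/embed",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def embed(text: str, model: str = "nomic-embed-text") -> list[float]:
    """Send the request to a running Ollama server and return one embedding vector."""
    req = build_embed_request(text, model)
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    # /api/embed returns {"embeddings": [[...], ...]}, one vector per input string.
    return body["embeddings"][0]
```

With Ollama running and the model pulled, calling embed("How do I make a peanut-butter sandwich?") should return a plain list of floats. That list of numbers is what a RAG feature compares when it looks for relevant passages.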

While I have plenty of local models available, I thought it would be worth experimenting with OpenAI’s ChatGPT models via the API.

I probably won’t use it much, since OpenAI charges for API usage, but it’s nice to know how to do it! Page Assist also makes the complete list of models easy to view and manage.

Page Assist Knowledge Management

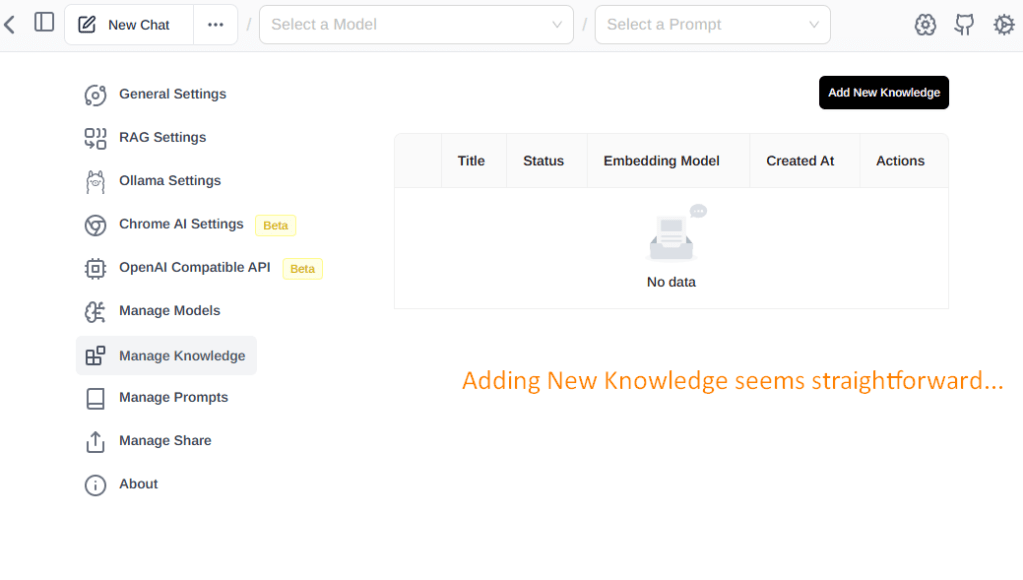

The part that interests me most is Knowledge Stack/Bank management, so let’s give it a shot:

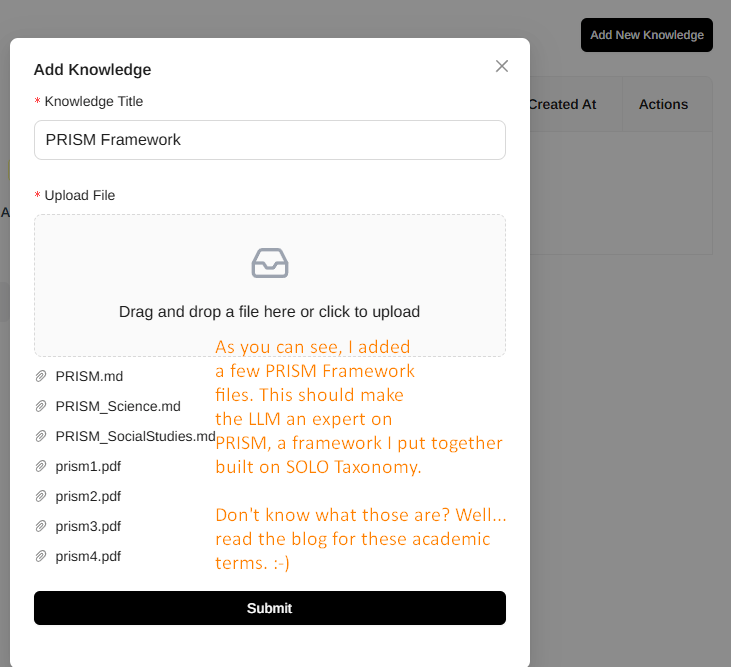

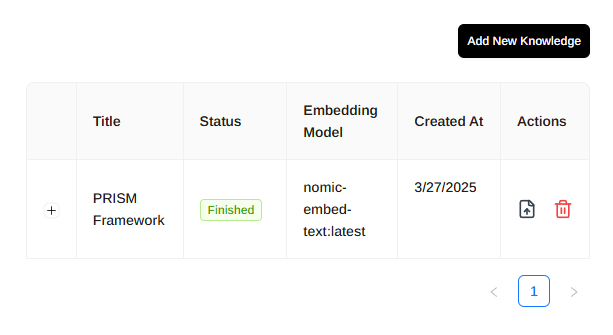

As you can see, I added Markdown (MD) text files and PDF files. It took less than a minute to add them to Knowledge Management:

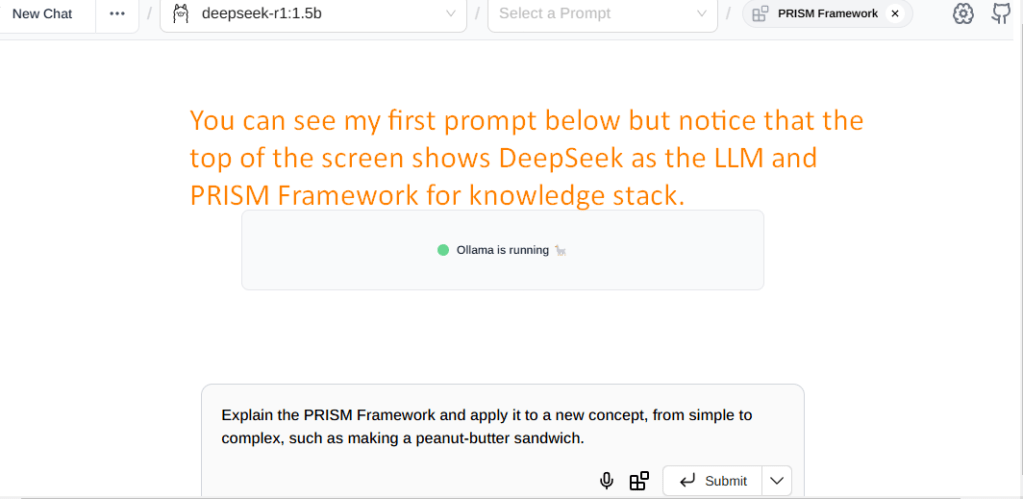

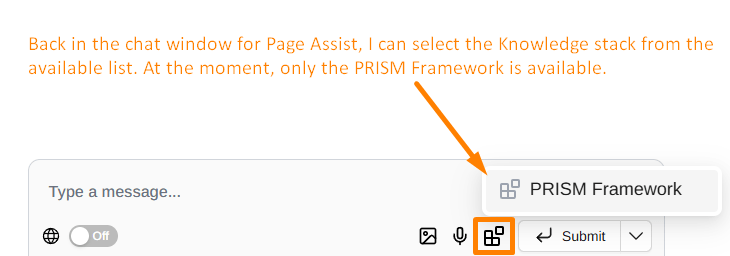
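Page Assist doesn’t expose its internals here, but RAG features generally work along these lines: each file is split into chunks, each chunk is embedded (here with nomic-embed-text), and at question time the chunks closest to the prompt are pasted into the model’s context. The sketch is a generic illustration under that assumption, not Page Assist’s actual implementation. The function names are hypothetical, and the tiny 2-D vectors in the usage note stand in for real embeddings.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_chunks(query_vec: list[float],
                 chunks: list[tuple[str, list[float]]],
                 k: int = 3) -> list[tuple[str, float]]:
    """Return the k (text, score) pairs most similar to the query vector.

    `chunks` is a list of (text, vector) pairs, e.g. passages from the
    uploaded Markdown and PDF files after they were embedded.
    """
    scored = [(text, cosine_similarity(query_vec, vec)) for text, vec in chunks]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_prompt(question: str, retrieved: list[tuple[str, float]]) -> str:
    """Paste the retrieved passages ahead of the question (hypothetical template)."""
    context = "\n\n".join(text for text, _ in retrieved)
    return f"Use the following context to answer.\n\n{context}\n\nQuestion: {question}"
```

For example, with chunks = [("PRISM overview", [1.0, 0.0]), ("Unrelated notes", [0.0, 1.0]), ("PRISM example", [0.9, 0.1])], calling top_k_chunks([1.0, 0.0], chunks, k=2) keeps the two PRISM passages and drops the unrelated one. Because only the top k chunks reach the prompt, a stack full of loosely related sources can crowd out the passage you actually care about.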

The question is, “How well will this work with a DeepSeek model running locally?”

I’m going to start with an easy prompt: explain the PRISM Framework and apply it to making a peanut-butter sandwich.

I’m almost afraid to look… will it work? OK, the first attempt produced too much gobbledyblahblah as the AI substituted its own meaning for PRISM. So I deleted most of the sources in the Knowledge Stack, leaving only a bare-bones explanation of what PRISM is.

The response to my prompt was:

Alright, so I’m trying to understand how to apply the PRISM framework to make a peanut-butter sandwich. I remember hearing that PRISM stands for Patterns, Reasoning, Ideas, Situation, and Methods. Let me break this down step by step.

First, I think about the Patterns part of PRISM. That means I need to identify the key elements involved in making a sandwich. For a peanut-butter sandwich, the main components are bread (for the two sides), peanut butter, mayonnaise, and any other ingredients like lettuce or tomatoes if adding them in moderation. So, the pattern here is that each sandwich requires these four things: two slices of bread, some peanut butter, mayo, maybe lettuce on one side.

Next, Reasoning comes into play. Once I have the basic pattern identified, I can reason out how to assemble it properly. Maybe I should start with the base, put the bread together first, then add the sandwich fillings like peanut butter and mayonnaise in between. I might also consider adding lettuce on one side for extra flavor.

For Ideas, I need to think about the different aspects of making a sandwich. Should I make it loud, messy, or clean? What if I wanted something really complicated with multiple layers or maybe some vegetables? The idea is just to have a plan and a clear picture in mind before starting the task.

Situation involves considering how all these ideas relate to each other and the overall goal of making a sandwich. So, I need to make sure that when I go through the steps I thought about earlier, they fit together logically and create something satisfying.

Lastly, Methods help me plan out the exact steps I need to follow. Maybe I can outline the process from start to finish, including how much bread, peanut butter, mayo, and lettuce or tomatoes I’ll need. Then, I can consider whether I have any ingredients at home or if I’d need to buy more.

Wait, am I missing anything? Did I cover all parts of PRISM? Patterns for identifying elements, Reasoning for assembling, Ideas for variety, Situation for relating ideas, and Methods for planning steps. Yeah, that seems comprehensive. Maybe in the next step, I can actually start building the sandwich with these concepts to see if it works as expected.

Applying the PRISM Framework to Make a Peanut-Butter Sandwich

- Patterns: Identify key elements of making a peanut-butter sandwich: two slices of bread (for sides), some peanut butter and mayonnaise, possibly lettuce or tomatoes for freshness, and any other optional ingredients.

- Reasoning: Plan the assembly process logically. Start with base materials like the bread, add sandwich fillings step by step, and consider adding additional toppings if desired to enhance flavor.

- Ideas: Think about how you want the sandwich to look and feel—be it loud, messy, or clean. Consider multiple layering options for complexity.

- Situation: Ensure that your ideas align with each other and aim for a satisfying end result. Use your plan to create a clear picture before starting the task.

- Methods: Outline steps from start to finish, calculating quantities needed based on your planning. Create a step-by-step guide using methods like “loud or messy” instructions.

Next Step: Use these concepts to build the sandwich, testing it with real ingredients to ensure effectiveness and enjoyment.

As nice as that was with DeepSeek, I decided to try Phi4 and other models. The results varied. I won’t post them here, but let’s just say some worked better than others (Phi4 being one of the better ones).

Models Appear to Work Faster

On a machine with one GPU, speed is of the essence. I don’t know why, but Ollama’s models feel faster when I use them through a web interface like Page Assist. Even when I run the same model through Msty (also via Ollama), it doesn’t seem to reason as well.
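“Feels faster” can be put to a rough test. Running a model with “ollama run” plus the --verbose flag prints an eval rate after each reply, and a non-streaming response from Ollama’s /api/generate endpoint includes eval_count (tokens generated) and eval_duration (in nanoseconds). The sketch below turns those two fields into tokens per second. It assumes Ollama’s default local address, and the helper names are hypothetical. It measures raw generation speed only, not reasoning quality.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def tokens_per_second(stats: dict) -> float:
    """Generation speed from an Ollama /api/generate response.

    eval_count is the number of generated tokens; eval_duration is in nanoseconds.
    """
    duration_ns = stats.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0
    return stats.get("eval_count", 0) / (duration_ns / 1e9)


def time_model(model: str, prompt: str, base_url: str = OLLAMA_URL) -> float:
    """Run one non-streaming generation on a running Ollama server; return tokens/second."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=600) as resp:
        return tokens_per_second(json.load(resp))
```

To compare models, call time_model with the same prompt for each tag that “ollama list” shows on your machine (for example “deepseek-r1” or “phi4”, assumed tags here) and compare the numbers. A single run is noisy, and the first call also pays the cost of loading the model, so averaging a few runs gives a fairer picture.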

I’ll have to decide whether I really want to try another tool. Page Assist made everything so easy that I don’t see the value in trying something else right now.

Discover more from Another Think Coming
