On LinkedIn, Zach Kinzler has had a fascinating exchange about the ethics of GenAI. It’s a thorny topic, and many would say that anyone who uses AI is guilty of original sin, and that the only way to expiate that sin is to stop using AI. Divine allusions aside, discussing ethics is tough work. I don’t pretend to be an expert on ethics, but I like the discussion.

Key Points

One of the points that Zach highlights is this observation from Cath Ellis:

We can’t truly call AI “ethical” or “responsible,” because these models were trained on people’s data without consent or transparency, and released without fully explaining what they’re built for.

Instead of pretending we’re using AI ethically, we should focus on using it effectively and appropriately to make the world better, while acknowledging the harms and wrongs that came with its creation.

Of course, I immediately had to dig a little deeper into this. After all, I’ll be facilitating a workshop on the ethics of AI use later this year for a specific higher education audience. I want them to do something. This prompted me to start reflecting on the underpinnings of ethics at play in Cath Ellis’s points.

Ethical Frameworks

When I asked GenAI to work as a thought partner, it suggested these frameworks. I had stumbled on them earlier and noted them for my session, but found the refresher worthwhile. You might want to read this entire piece.

- Consequentialism: This framework holds that the morality of an action is determined by its outcomes or consequences. One form of it is Utilitarianism, which argues that the most ethical choice is the one that produces the greatest good for the greatest number of people. Surprisingly, at dinner the other day, my son argued for Utilitarianism from the perspective of the Trolley Car Problem <eye-roll>. (Be careful, that link will take you to a game and you may never be the same again.)

- Pragmatic Ethics: This framework evaluates actions and beliefs based on their practical application and success in solving problems. Instead of adhering to rigid, abstract principles, it focuses on what works in a given context.

- Deontological framework: This framework focuses on duties and rules. It might be used to argue against using GenAI: because GenAI was created through an unethical act (e.g., using data without consent, which violates a rule), any use of that tool is inherently tainted and morally wrong, regardless of any good it might produce. (Source: Adapted from BoodleBox with Gemini 2.5 Pro)

These are all cool ideas, and I can easily see people taking the easy approach to GenAI.

GenAI: good or bad? A quick YES or NO is often just a dash to escape the critical thinking involved. But organizations can’t do this without consequences (no, must not mention politics right now).

Given this conversation, what might a position statement on the use of GenAI look like? Er, well, why not ask?

Position Statement on the Use of Generative AI

You can see this statement tries to do what Cath Ellis suggests in her point at the start of this blog entry. Is it perfect? No. But ain’t nothing perfect.

We recognize that Generative AI technologies were developed on a flawed foundation, often utilizing vast datasets without the explicit consent or full transparency of the original creators. To ignore this history is irresponsible. However, to refuse engagement with these powerful tools is to forfeit their immense potential for positive innovation, problem-solving, and human advancement.

Therefore, we commit to a framework of intentional and ethical application. Our approach is not to pretend AI is inherently “ethical,” but to ensure our use of it is. We will move forward by applying these tools with critical awareness of their origins, a clear and beneficial purpose, a commitment to responsible practices like verification and attribution, and a constant evaluation of their impact on our goals and values. We choose to use this imperfect tool to build a more equitable and effective future, while actively acknowledging the debts of its past.

With that position statement in mind, here are the principles explained in a framework and followed up by a rubric.

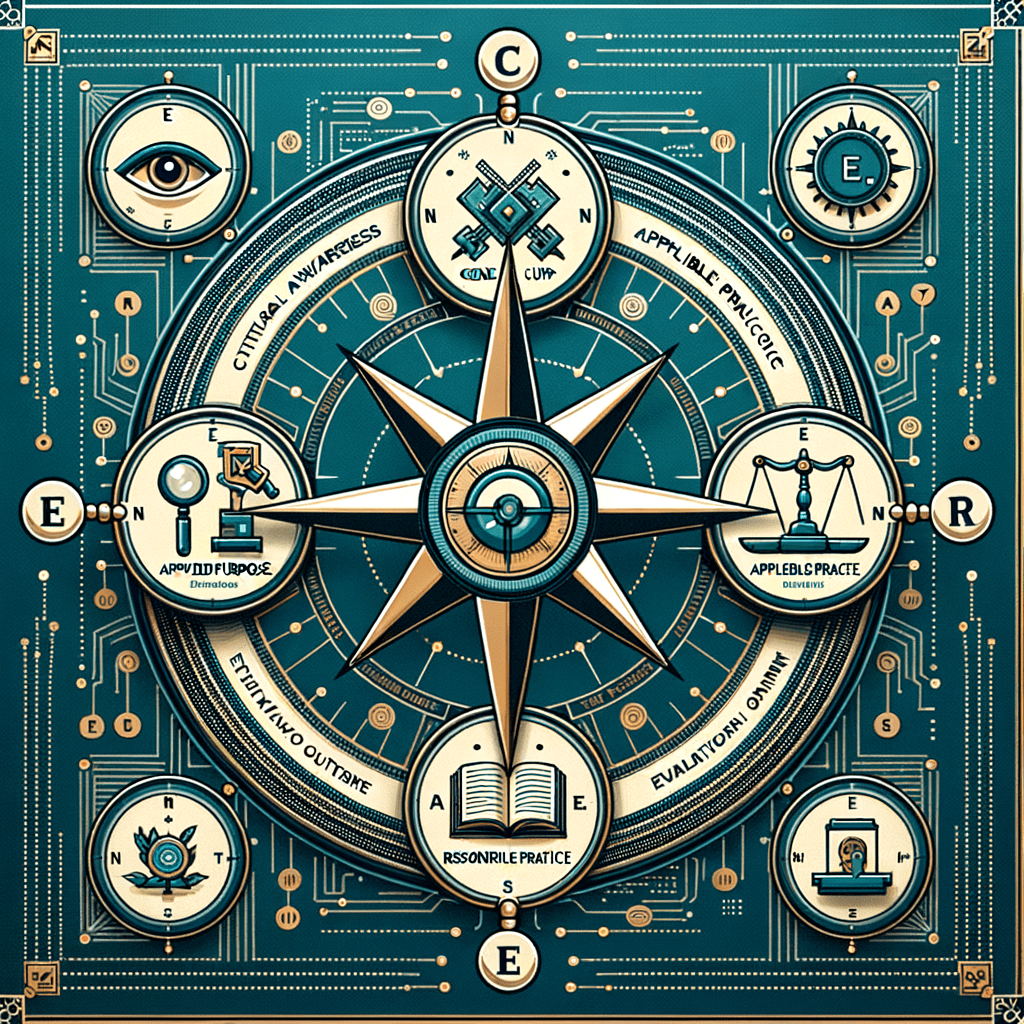

C.A.R.E. Framework

It’s easy to put together a framework. This one incorporates some of the ideas mentioned above.

C.A.R.E. Framework Explained (K-16 Examples)

| Letter & Principle | Explanation | K-16 Example |
|---|---|---|
| C – Critical Awareness | This is the foundational step of acknowledging the tool’s limitations and problematic origins. It means understanding that AI outputs can be biased, inaccurate, or incomplete. It involves maintaining a healthy skepticism and never treating the AI as an absolute authority. | A high school history teacher has students use an AI to summarize a historical event, but the core of the assignment is to then critique the AI’s summary for potential biases, missing perspectives, or factual errors, using primary source documents as a baseline. |
| A – Applied Purpose | This principle demands that we use AI intentionally and for a constructive reason. The goal should be to augment human capability, solve a specific problem, or increase efficiency for a meaningful task, not simply to replace human thought or effort. The question is always, “What good am I trying to achieve with this?” | A university biology student uses an AI to summarize a dense academic paper on gene editing. However, the assignment requires them to submit the AI’s summary alongside their own annotated version of the original paper. They must add comments that identify one key nuance the AI missed, one methodological detail the AI oversimplified, and then explain how the paper’s actual findings will inform their own experimental design. This approach uses the AI as a ‘first-pass’ reading guide but ensures the student engages critically with the source material, preventing cognitive offloading and promoting deeper comprehension. |
| R – Responsible Practice | This is about the how. It involves the practical ethics of using the tool. This includes citing the AI when used, fact-checking its outputs against reliable sources, protecting personal or sensitive data, and refusing to use it for plagiarism or to create misinformation. | A middle school English teacher creates a classroom policy where students can use AI to brainstorm ideas or create an outline for an essay, but they must submit their chat history along with their final paper to demonstrate how they used the tool as a thinking partner, not a ghostwriter. |
| E – Evaluative Outcome | This is the reflective step. After using the AI, we must assess the result. Did it help achieve the intended purpose? Did it create any unintended negative consequences? Did it deepen understanding or create a shortcut that hindered learning? This closes the loop and informs future use. | At the end of a semester, a college professor leads a discussion asking students to reflect on their use of AI tools. They discuss whether the tools made them better researchers and writers or if they became too reliant on them, evaluating the net impact on their learning objectives. |

C.A.R.E. Rubric

C.A.R.E. Framework Rubric

This rubric can be used to self-assess your use of an AI tool for a specific task or to evaluate a project completed with AI assistance. It helps move the focus from the tool itself to the quality and thoughtfulness of the human’s process.

| Principle | 1 – Beginning | 2 – Developing | 3 – Accomplished | 4 – Exemplary |
|---|---|---|---|---|
| C – Critical Awareness | Uses AI output as-is, treating it as a factual and unbiased source. | Acknowledges that AI can be inaccurate or biased but does not actively check for it. | Actively questions AI output, cross-referencing key facts with reliable external sources. | Systematically investigates AI output for specific biases (e.g., cultural, demographic) and documents the limitations of the AI’s perspective in the final work. |
| A – Applied Purpose | Uses AI without a clear goal, often for simple task replacement (e.g., “write this for me”). | Uses AI with a general goal, but the task does not necessarily require higher-order thinking. | Uses AI for a clearly defined purpose that augments human skill (e.g., brainstorming, summarizing research, overcoming writer’s block). | Uses AI for a novel or complex purpose that would be difficult or impossible without it, clearly articulating how the tool enabled a higher level of analysis or creativity. |
| R – Responsible Practice | Submits AI-generated content as their own without attribution. Does not consider data privacy. | Provides a basic citation that AI was used, but the process is not transparent. | Cites AI use clearly and fact-checks all outputs. Avoids inputting sensitive or personally identifiable information (PII) into the tool. | Documents and shares the AI interaction process (e.g., prompts used) to ensure transparency. Adheres to strict institutional data governance policies for all inputs. |
| E – Evaluative Outcome | Does not reflect on the use of the AI or the quality of the final output. | Reviews the final output for basic correctness and completion of the task. | Reflects on how using the AI impacted the quality of the work and their own learning process. | Conducts a systematic evaluation of the AI’s contribution, noting where it was most and least helpful, and uses these insights to refine future workflows and prompting strategies. |
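
If you want to tally a self-assessment rather than eyeball it, here is a minimal Python sketch. The level names and the four principles come straight from the rubric. Everything else is my assumption for illustration: the `summarize` function, the `focus_next` field, and the idea of flagging the lowest-scoring principle as the one to work on next are not part of the rubric itself.

```python
# Level names taken from the rubric's column headers.
LEVELS = {1: "Beginning", 2: "Developing", 3: "Accomplished", 4: "Exemplary"}

# The four C.A.R.E. principles, in rubric order.
PRINCIPLES = (
    "Critical Awareness",
    "Applied Purpose",
    "Responsible Practice",
    "Evaluative Outcome",
)


def summarize(scores: dict[str, int]) -> dict:
    """Turn 1-4 scores into level names and flag the weakest principle.

    Hypothetical helper: the rubric does not prescribe any aggregation,
    so picking the lowest score as "focus_next" is an assumption.
    """
    missing = [p for p in PRINCIPLES if p not in scores]
    if missing:
        raise ValueError(f"Missing scores for: {', '.join(missing)}")
    for principle in PRINCIPLES:
        if scores[principle] not in LEVELS:
            raise ValueError(f"{principle}: score must be 1, 2, 3, or 4")

    # On a tie, min() keeps the first principle in rubric order.
    weakest = min(PRINCIPLES, key=lambda p: scores[p])
    return {
        "levels": {p: LEVELS[scores[p]] for p in PRINCIPLES},
        "focus_next": weakest,
    }


if __name__ == "__main__":
    my_scores = {
        "Critical Awareness": 3,
        "Applied Purpose": 2,
        "Responsible Practice": 4,
        "Evaluative Outcome": 2,
    }
    report = summarize(my_scores)
    for principle, level in report["levels"].items():
        print(f"{principle}: {level}")
    print("Focus next on:", report["focus_next"])
```

I left out a total score on purpose. The rubric describes four separate habits, and adding them into one number would hide which habit needs attention.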

I have a little more to add but will save that for the next blog entry.
