I love this chart. It captures what I have been trying to say about using knowledge stacks+custom instructions, or what has been described as context engineering. Is it based on real data and evidence? Well….

The Prompt for the Chart

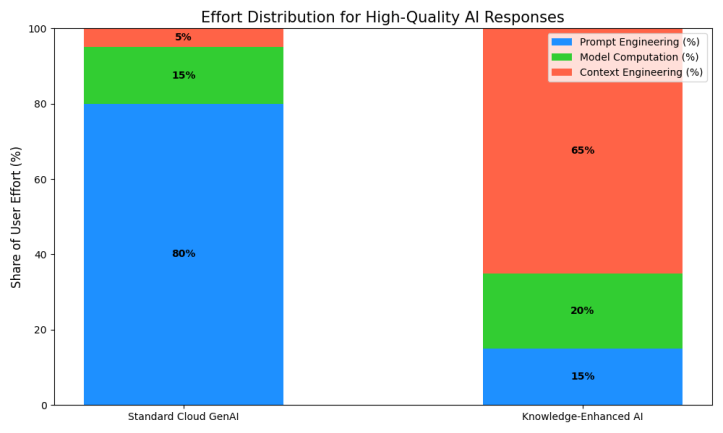

This chart would compare a “Standard Cloud GenAI” (using only the model’s built-in knowledge) with a “Knowledge-Enhanced AI” (like a custom GPT or BoodleBox Bot, using your uploaded files). The Y-axis would be “Share of User Effort (%)”.

Bar 1: Standard Cloud GenAI

- Prompt Engineering (80%): This slice would be massive. Without specific context, you bear the entire burden of writing a long, highly detailed, and perfectly crafted prompt. You have to include all background information, define terms, provide examples, and specify the exact format, hoping the AI understands your intent from the prompt alone.

- Model Computation (15%): This represents the AI’s processing work.

- Context Engineering (5%): This slice is tiny or non-existent. The effort here is limited to quickly pasting small snippets of text you might have on your clipboard.

Bar 2: Knowledge-Enhanced AI

- Context Engineering (65%): This is now the largest slice. Your primary effort shifts to identifying and providing the right documents, data, or other knowledge sources to the AI. You are curating the “ground truth” for the model to work from.

- Prompt Engineering (15%): This slice shrinks dramatically. Because the AI has the specific documents for context, your prompt can be much simpler and more direct (e.g., “Summarize the key findings from the attached report” instead of a multi-paragraph prompt explaining the report’s contents).

- Model Computation (20%): This slice might be slightly larger, as the model is now processing both your prompt and the provided knowledge files.

Explanation of Key Concepts

- Prompt Engineering: This is the skill of crafting the input (the prompt) you give to an AI. It involves choosing the right words, structure, and instructions to guide the model toward the desired output. In the standard model, it’s the most critical and labor-intensive part of the process.

- Context Engineering: This is the process of selecting and providing specific, relevant information (your “knowledge stack” or “knowledge bank”) to the AI along with your prompt. By uploading a file, you are engaging in context engineering. This grounds the AI’s response in your specific data, dramatically increasing accuracy and relevance while reducing the need for complex prompts.

In essence, using a knowledge-enhanced platform shifts the work from “prompt artist” to “information curator.” This shift generally leads to faster, more accurate, and more reliable results because you are controlling the source of truth.
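The two bars described above can also be written down as plain data, which makes it easy to check that each bar adds up to 100% before charting it. This is a minimal sketch, not the code Codestral produced: the names `EFFORT_SHARES`, `check_totals`, and `render_bar` are made up for illustration, and the percentages are copied from the description, not measured.

```python
# Illustrative percentages copied from the chart description above.
# They are not measured data.
EFFORT_SHARES = {
    "Standard Cloud GenAI": {
        "Prompt Engineering": 80,
        "Model Computation": 15,
        "Context Engineering": 5,
    },
    "Knowledge-Enhanced AI": {
        "Context Engineering": 65,
        "Prompt Engineering": 15,
        "Model Computation": 20,
    },
}


def check_totals(shares):
    """Raise ValueError if any bar's slices do not add up to 100%."""
    for bar, slices in shares.items():
        total = sum(slices.values())
        if total != 100:
            raise ValueError(f"{bar} sums to {total}%, expected 100%")


def render_bar(slices, width=20):
    """Draw one stacked bar as text, using the first letter of each slice name."""
    return "".join(name[0] * (pct * width // 100) for name, pct in slices.items())


check_totals(EFFORT_SHARES)
for bar, slices in EFFORT_SHARES.items():
    print(f"{bar:<22} |{render_bar(slices)}|")
```

Each letter in the printed bar stands for 5% of user effort (P for prompt engineering, M for model computation, C for context engineering), so the shift from a P-heavy bar to a C-heavy bar is visible even without a plotting library.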

The Chart

Explanation of Chart

Key Observations:

- Standard Cloud GenAI:

  - Prompt Engineering: 80% of the effort is dedicated to crafting detailed prompts. This is because the AI relies solely on the information provided in the prompt.

  - Model Computation: 15% of the effort is spent on the AI’s processing work.

  - Context Engineering: Only 5% of the effort is required for context engineering, which involves pasting small snippets of text.

- Knowledge-Enhanced AI:

  - Context Engineering: 65% of the effort is now focused on curating relevant documents, data, or other knowledge sources. This is because the AI has access to specific files for context.

  - Prompt Engineering: This drops to 15%, as the prompts can be simpler and more direct.

  - Model Computation: This increases slightly to 20%, as the model processes both the prompt and the provided knowledge files.

Explanation of Key Concepts:

- Prompt Engineering: This involves crafting the input (the prompt) you give to an AI. It’s the most critical and labor-intensive part of the process when using a standard model.

- Context Engineering: This is the process of selecting and providing specific, relevant information to the AI along with your prompt. By uploading a file in BoodleBox, you are engaging in context engineering. This grounds the AI’s response in your specific data, dramatically increasing accuracy and relevance while reducing the need for complex prompts.

In essence, using a knowledge-enhanced platform shifts the work from “prompt artist” to “information curator.” This shift generally leads to faster, more accurate, and more reliable results because you are controlling the source of truth.
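To make the “information curator” idea concrete, here is a rough sketch of how curated context and a short prompt might be combined into one request. It assumes nothing about how BoodleBox or custom GPTs actually assemble context internally; the function `build_request`, the section labels, and the file contents are all hypothetical.

```python
def build_request(custom_instructions, knowledge_files, user_prompt):
    """Assemble one request: curated context first, the short prompt last.

    Hypothetical helper for illustration only; real platforms handle
    uploaded files in their own way.
    """
    sections = [f"# Instructions\n{custom_instructions}"]
    for name, text in knowledge_files.items():
        sections.append(f"# Knowledge file: {name}\n{text}")
    sections.append(f"# Request\n{user_prompt}")
    return "\n\n".join(sections)


# Placeholder content, invented for this example.
request = build_request(
    custom_instructions="Answer only from the knowledge files provided.",
    knowledge_files={"report.txt": "(full text of the report goes here)"},
    user_prompt="Summarize the key findings from the attached report.",
)
print(request)
```

The curation work lives in `custom_instructions` and `knowledge_files`, which are set up once and reused, while the prompt itself stays a single sentence.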

How the Chart Was Created

The chart above was generated from the prompt using Codestral via BoodleBox. I was quite surprised at the quality and legibility. But how did I get to the prompt? I started with a chart on semiconductors, infrastructure, and applications that compares the cloud stack with the generative AI stack. Then I asked:

What would a chart look like but for the knowledge stack? Compare a knowledge stack/bank based AI to just a cloud GenAI for efficiency? Explain context engineering and prompt engineering as part of this. Be as accurate as possible.

Gemini 2.5 Pro via BoodleBox returned this response:

Of course. It’s a great question that gets to the heart of how to work more effectively with AI. While I can’t generate an image, I can describe what that chart would look like and explain the concepts behind it.

The original chart shows where the value (gross margin) is captured in the tech stack. A similar chart for efficiency would show where the effort is spent to get a high-quality result.

The rest of the response included the prompt for the chart at the top of this blog entry. I also spent some time trying to get ChatGPT o3, as well as a few other models, to create a chart, all in BoodleBox. Claude 4.0 Opus generated a chart in Mermaid syntax, which, while accurate, was clunky compared to Codestral’s effort.

How Do You Know? We Don’t

One question that comes to mind is, “How do we know the information in the graph is accurate to actual data or evidence?” The answer is, “We do not. It’s a fabrication, a made-up chart that captures what I believe about standard Cloud AI where you copy-n-paste in the info and write a prompt vs custom instructions with knowledge stack.” But it looks good, huh?

| Aspect | Standard Cloud GenAI | Knowledge-Enhanced AI | Source |
|---|---|---|---|
| Prompt Engineering | Crafting precise instructions, iterative testing, and domain expertise (80% of the work) | Designing structured task schemas and roles | The Strategic Role of Prompt Engineering; Prompt Engineering Overview and Guide |
| Context Engineering | Minimal effort (5% per chart) | Role-specific agent instructions, memory modules, validation agents, and multi-agent orchestration | The Strategic Role of Prompt Engineering |
| Effort Shift | Manual, trial-and-error task focused on single-turn interactions | System design, developing protocols, task schemas, and managing knowledge contexts | Prompt Engineering: An Emerging New Role in AI; The Strategic Role of Prompt Engineering |
| Scalability | Limited, ad hoc | High, designed for scale and auditability | The Strategic Role of Prompt Engineering |

My Experience

My experience? Here’s what I have learned from setting up over 20 of these and countless hours of experimentation, albeit not scientific:

- Custom instructions and knowledge stacks eliminate the random performance you get from simply writing prompts and pasting in text and files.

- Context engineering yields better results than prompt engineering.

- While the percentages in the chart are arbitrary and not based on data, it would be possible to measure them.
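One way such a measurement could work, sketched under loud assumptions: log how many minutes each activity takes during a session, then convert the totals into shares. The function `effort_shares`, the activity labels, and every number in `sample_log` are hypothetical placeholders, not results from any real session.

```python
from collections import defaultdict


def effort_shares(log):
    """Convert (activity, minutes) entries into percentage shares of total time."""
    totals = defaultdict(float)
    for activity, minutes in log:
        totals[activity] += minutes
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {activity: 100 * minutes / grand_total for activity, minutes in totals.items()}


# Hypothetical log entries, invented for illustration only.
sample_log = [
    ("prompt engineering", 12),
    ("context engineering", 30),
    ("prompt engineering", 3),
    ("waiting on model", 5),
]

for activity, share in effort_shares(sample_log).items():
    print(f"{activity}: {share:.0f}%")
```

Time spent is only one possible proxy for effort. A real study would also need a consistent definition of where prompt writing ends and context curation begins.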

So, in the end, this data and chart lack the empirical rigor and transparency needed for strong scientific claims. As I suspect and will point out in my upcoming presentation, it’s simply a conceptual framework that I think is valuable for understanding AI interaction patterns. The graph is an illustration without supporting evidence.
