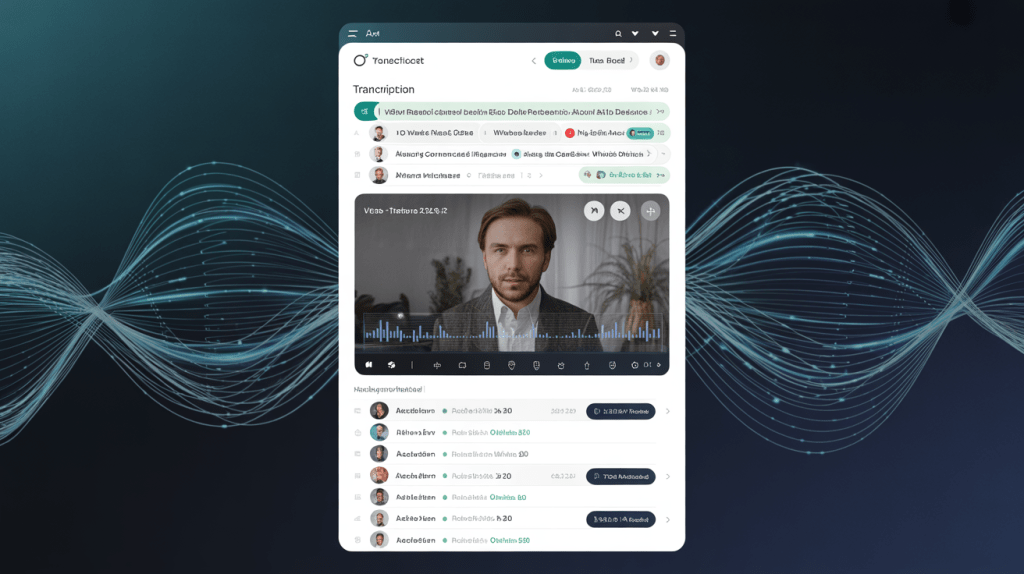

Cued up in my podcast feed are Zach Kinzler’s engaging interviews with folks focused on Gen AI. His podcast is Smarter Campus (see the complete list of episodes here). While I have been trying to catch up on my podcast feed, there are always some amazing concepts that fly by while I’m driving or walking or washing dishes. For those podcasts, I export the audio from the Overcast app on my iPhone, transcribe the audio with Whisper, and ask my Outline Helper Bot via BoodleBox to process it. My reflections appear in square brackets.

Smarter Campus Podcast: From Video Games to the Classroom: Michael Curtin on Translating AI for Higher Education

I. Introduction and Guest Background

“I grew up in a college town so that’s kind of cast realities very, very bizarre for me. I’m very, very used to a bunch of extraordinarily smart people doing extraordinarily smart things.”

- Host introduces Michael Curtin from the University of Illinois Urbana-Champaign

- Michael’s unique background combining education and video game industry

- Experience with emerging technologies and systems thinking

- Well positioned when ChatGPT (GPT-3.5) launched, thanks to existing momentum in emerging tech

II. Understanding Generative AI’s Nature and Limitations

“Generative AI is built different in the sense that that is not what it’s meant to do at all… it will deviate from that exact set of text because of the way it builds words.”

- People expect AI to act like traditional computer programs (factory robots)

- Generative AI is fundamentally different – built for creativity, not repetition

- Struggles with traditional technology integration (calendars, exact memorization)

- Marketing dollars creating unrealistic expectations about AI capabilities

III. AI in University Settings: Experts vs. Generalists

“A university setting is a place where there’s a knowledge center… hundreds of highly specialized people who have often a very narrow knowledge but extraordinarily deep.”

- Universities as knowledge centers with deep specialists

- Challenge: keeping human experts as authorities, not replaced by confident AI

- AI as iteration tool for bright people to test ideas

- Voice mode enabling closed-loop thinking without human listeners

[The idea that you can use a chatbot like OpenAI’s ChatGPT (and, I hope, BoodleBox’s smartphone app in January 2026) as a closed loop, a sounding board, someone to converse with, is spot on. One of my favorite things to do in a workshop is to create a custom GPT, then have a conversation with it about the content (if the Wi-Fi connection is good enough). It is a little like what you can do with NotebookLM’s interactive component. -Miguel]

IV. Educational Transformation and Faculty Adoption

“If you send someone a writing assignment, the expectation is that they write 100% of it themselves, that’s something that we can’t enforce anymore.”

- Traditional classroom-student relationships being upended

- No way to detect AI-generated content reliably

- Faculty stretched thin, making adaptation challenging

- Need for transparency about AI use in academic work

- Distinction between thinking WITH AI vs. using AI as outcome producer

[We have entered a time when it’s easy to produce GenAI content, but ensuring authenticity in the author/creator’s voice/style is all the more important. You can start from scratch and construct your creation yourself, or spend a lot of time editing, or some combination of that. But there is a time investment that’s not trivial. The key piece is that of thinking with AI, so long as it doesn’t replace your own thinking. I really like how Petersen and Magliozzi frame this in their book, AI in the Writing Workshop. -Miguel]

V. The Reality Crisis: AI’s Impact on Truth and Media

“I think all of AI is devaluing reality… The real world does not really exist on a computer anymore because of the ability for generative AI to just make stuff.”

- Sora and other video generators creating convincing fake content

- Social media becoming disconnected from reality

- “AI Slop” contaminating reference materials for designers

- Emergence of AI-free spaces as human pushback

- Challenge of distinguishing real from generated content

[We are engaged in a massive, costly experiment. In time, this experiment will bear fruit in ways we can’t imagine or foresee. That grand experiment is composed of a million points of failure and success. Deciding what counts as success will fall to the greedy folks shoving Gen AI down everyone’s throats, for good or ill. The rest of us will get incidental benefits from these technologies, as we do from smartphones and other technologies.

In the end, those benefits may be quite significant. I can only hope that scientific inquiry remains free from fake content, and that existing communities of inquiry are not dismantled by the overwhelming tsunami of AI workslop. As an individual, I have realized many benefits from creating content that becomes true by virtue of my saying, “This is an accurate probability, an accurate prediction of what could be.” That’s not something to be lightly discarded, at least from my perspective. But it has to be balanced against the impact of these technologies on the world, a finite resource. -Miguel]

VI. Student Perspectives and Teaching Approaches

“Undergrads in my experience… view it as an entire threat to a lot of the things that they love about their life, including the arts and music.”

- Undergraduate students: frustrated, see AI as threat to creative fields

- Graduate students: more welcoming, closer to workforce needs

- Teaching focus on process over product

- AI jumps to finished products, missing iterative learning

- Importance of thumbnails and exploration in design

VII. Practical Advice for Educators

“The good news is that they are the smartest people in the room… That student knew the facts were wrong because she knows her stuff.”

- Example: Mechanical engineering student identifying AI errors

- Educators remain experts in their fields

- Need to identify granular uses for AI tools

- Being ready to reject AI when it is inappropriate

- Expertise as protection against confident but wrong AI

VIII. Personal AI Use and Future Outlook

“There’s no point in building a sand castle on a beach because it’s going to get washed away. The important thing to do is to learn to surf.”

- Michael’s use: Voice conversations while jogging for story iteration

- AI as thinking partner, not content creator

- Avoiding sunk-cost fallacy through rapid iteration

- Future will “just get weirder”

- Focus on adaptability rather than fixed solutions

[There are ways to apply this to various audiences and roles, so I’m looking forward to exploring those. -Miguel]

Discover more from Another Think Coming