While working on a presentation, I asked Google Gemini to rank several ways of using AI by how safe they are for your data, and I wanted to share the results. This is research, so if you know better, feel free to drop a comment. I’ve added my own thoughts and recommendations under each level. At the end, you’ll find a “final hardware recommendation” for a machine that can run local AI with ease. I’m accepting donations now.

The Question

So rank these with a percentage from most safe for data to least:

- Local AI model, prob open source on your hardware

- Local AI accessing AI model like ChatGPT or Gemini via API

- Cloud API with data privacy protections connecting to variety of models via API

- ChatGPT Pro or Gemini Pro via app and/or website

Gemini’s Response

The response below ranks my scenarios from most safe for data privacy to least safe, with approximate percentage estimates for the level of control and privacy:

Most Safe for Data Privacy (95-100%)

- Local AI model, probably open source on your hardware (95-100%)

- Why: This is the gold standard for data privacy. Your data never leaves your local machine. You have complete control over the model, its inputs, and its outputs. There’s no third-party server involvement, no data transmission over the internet, and no risk of your data being used for training by external entities. The “probably open source” aspect further enhances transparency, as you can inspect the code to verify its data handling practices.

- Caveats: You are responsible for securing your own hardware and network.

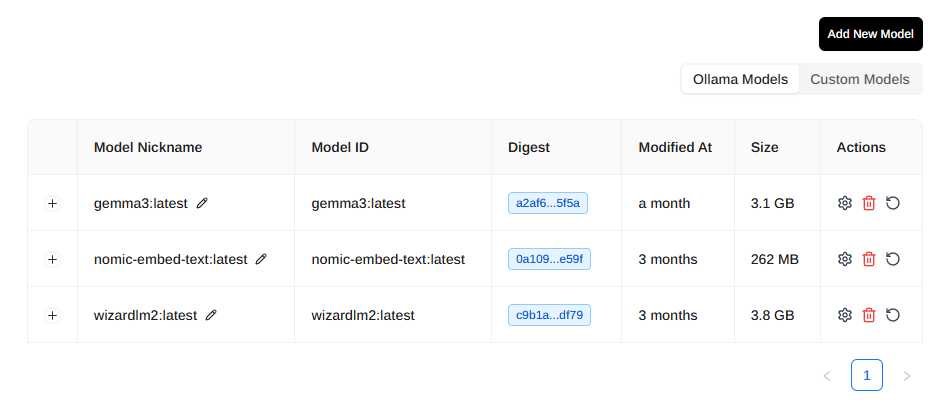

Miguel’s Thoughts: For me, after trying out several solutions like Msty, Sanctum, LM Studio, I’m going to probably go with Ollama and Page Assist for my session. I have to admit that Gemma 3 is running great on my one GPU machine. Here’s what that looks like:
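For anyone who would rather script against a local setup than use a browser extension, here is a minimal sketch of calling Ollama from Python. It assumes Ollama is running on its default local port (11434) and that a Gemma 3 model has been pulled under the tag `gemma3`; the helper names `build_request` and `ask_local_model` are made up for illustration. The point to notice is that the only address involved is `localhost`.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port (11434)
# and a Gemma 3 model is available under the tag "gemma3".
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "gemma3") -> urllib.request.Request:
    """Build a POST request for Ollama's local generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "gemma3") -> str:
    """Send the prompt to the local Ollama server and return the reply text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


# Example (requires a running Ollama server):
# print(ask_local_model("Why does local AI help with data privacy?"))
```

Because the request targets the local machine, the prompt is not sent to an outside provider, provided nothing else on the machine forwards it.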

Moderately Safe for Data Privacy (70-85%)

- Cloud API with data privacy protections connecting to a variety of models via API (70-85%)

- Why: This is similar to the previous point, but with the added complexity of “a variety of models.” If you’re using a platform that acts as an intermediary to multiple AI models, you need to understand the data privacy policies of all parties involved (the platform itself and each underlying model provider). While the platform might have good privacy policies, the specific model providers might have different terms. Reputable cloud providers often offer strong data processing agreements (DPAs) and compliance certifications (like SOC 2, GDPR, HIPAA compliance).

- Caveats: The “chain of custody” for your data is longer, increasing the number of potential points of vulnerability if any party in the chain has weaker security or privacy practices. It’s crucial to thoroughly vet all providers.

Miguel’s Thoughts: Ok, for this, I WAS using Perplexity AI, but decided that BoodleBox gives me access to a really nice Bot development environment. This is what I imagine BoodleBox is: a cloud AI service that provides protected access to AI models via API.

I am really enjoying the ability to create Bots in BoodleBox. Create to what purpose? Well, that’s the joy of discovery. For example, I quickly created a Bot (using the same Knowledge Stack) for both free and paid users of BoodleBox. In BoodleBox, free users get unlimited access to free chatbots like GPT-4o mini. Paid users, on the other hand, get Claude 3.7 (BoodleBox really prefers Claude since it comes as the default).

I was able to make this one for paid BoodleBox users quickly this evening, adapting it from one I made for free users. I’m using this for a workshop at some point, and I already created one that works really well in local AI (Ollama + Page Assist + Gemma 3). ChatGPT will be my next effort, but it’s pretty easy.

Least Safe for Data Privacy (50-70%)

- ChatGPT Pro or Gemini Pro via app and/or website (50-70%)

- Why: When you use these services directly through their consumer-facing apps or websites, your data is sent to the provider’s servers. While “Pro” versions often come with better data privacy policies than free tiers (e.g., opting out of data being used for model training by default), there are still considerations:

- Default Training: On free tiers, and even some consumer-level paid tiers, your data might be used for training unless you explicitly opt out.

- Human Review: Data sent through these interfaces might be subject to human review for abuse monitoring or service improvement, even if not for general model training.

- Data Retention: Conversation history is often retained by default for a period (e.g., 30 days or longer), even if you delete it from your front-end view.

- Broader Data Collection: Consumer apps and websites might collect more telemetry or usage data than a streamlined API endpoint.

- Caveats: This is the most convenient option for many users, but it inherently involves less control over your data’s lifecycle and greater reliance on the provider’s internal policies and security.

Miguel’s Thoughts: Since I use the Pro versions of both ChatGPT and Gemini, not to mention an occasional Mistral.ai or DeepSeek, it’s absolutely concerning. I’m really trying to make an effort to NOT spill confidential data of any sort into web-based or app-based AIs. I really wish our little smartphones were able to run AI locally, but for now the best option is probably cloud AI via API.

Important Considerations for All Tiers:

- User Behavior: No matter the technical setup, your actions are paramount. Never input highly sensitive or confidential information into any AI model unless you have explicit, ironclad guarantees and legal agreements regarding its handling, and even then, proceed with extreme caution.

- Policy Changes: Data privacy policies can change. It’s essential to stay informed about the terms of service for any AI service you use.

- Data Anonymization/Pseudonymization: For any cloud-based solution, consider anonymizing or pseudonymizing your data before sending it, if possible, to further reduce privacy risks.
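The pseudonymization tip above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: it catches email addresses and US-style phone numbers with regular expressions and swaps them for numbered placeholders, keeping the mapping on your machine so the answer can be restored afterward. The function names are hypothetical, and real data will need far more thorough detection (names, addresses, ID numbers) than this.

```python
import re

# Deliberately simple patterns; assumptions for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def pseudonymize(text):
    """Replace emails and phone numbers with placeholders.

    Returns the cleaned text plus a placeholder-to-original mapping
    that never needs to leave your machine.
    """
    mapping = {}

    def make_replacer(label):
        def replace(match):
            value = match.group(0)
            # Reuse the same placeholder when a value repeats.
            for placeholder, original in mapping.items():
                if original == value:
                    return placeholder
            count = sum(1 for key in mapping if key.startswith(f"[{label}_")) + 1
            placeholder = f"[{label}_{count}]"
            mapping[placeholder] = value
            return placeholder
        return replace

    text = EMAIL_RE.sub(make_replacer("EMAIL"), text)
    text = PHONE_RE.sub(make_replacer("PHONE"), text)
    return text, mapping


def restore(text, mapping):
    """Put the original values back into text returned by the AI service."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text
```

You would send only the cleaned text to the cloud service, then run `restore` on the reply locally.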

Type of Hardware

Another question I have is what type of hardware is needed…

“Mid-Range Usage” for Local AI:

This typically implies running models up to 13B (billion) parameters comfortably, possibly with 4-bit quantization, or smaller models (7B) with larger context windows.

Larger models (e.g., 30B+) would likely require multiple high-end GPUs or professional-grade hardware, which goes beyond “mid-range.”

GPU VRAM is King:

For local LLMs, the most critical factor is the GPU’s VRAM (Video Random Access Memory).

More VRAM allows you to load larger models or run models with higher precision.
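A rough way to see why VRAM matters is to estimate how much memory the model weights alone take: parameters times bits per weight, divided by 8 to get bytes. The sketch below does that arithmetic. The 20% overhead allowance for context and runtime buffers is an assumption picked for illustration, not a measured figure, and real usage varies with context length and the software you run.

```python
def estimate_weights_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_weight / 8


def fits_in_vram(params_billions: float, bits_per_weight: int,
                 vram_gb: float, overhead_fraction: float = 0.2) -> bool:
    """Rough check: weights plus an assumed overhead allowance vs. VRAM.

    overhead_fraction is a guess covering context cache and buffers.
    """
    needed = estimate_weights_gb(params_billions, bits_per_weight) * (1 + overhead_fraction)
    return needed <= vram_gb


for size, bits in [(7, 16), (13, 16), (13, 4), (30, 4), (70, 4)]:
    gb = estimate_weights_gb(size, bits)
    print(f"{size}B at {bits}-bit: about {gb:.1f} GB of weights")
```

By this back-of-the-envelope math, a 13B model at 4-bit needs about 6.5 GB for weights and sits comfortably on a 12GB card, while a 30B model at 4-bit needs about 15 GB and is already tight on 16GB. Some tools can spill part of a model into system RAM, at a cost in speed.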

Recommendations are General:

Look for desktop PCs, as laptops with truly powerful GPUs for sustained AI workloads can be significantly more expensive and have thermal limitations.

- Open-Source Software: For local AI, you’d be looking at software like Ollama, LM Studio, or specific model implementations (e.g., llama.cpp) that allow you to download and run models locally.

- “Mid-Range” Computer Cost: Expect to pay anywhere from $1000 – $2500+ for a desktop PC with a suitable mid-range GPU for local AI.

Comparison Table

| Scenario | Safety Rating (Data Privacy) | Mid-Range Local GPU Needs (VRAM) | Recommendation (Illustrative) |
|---|---|---|---|
| Local AI model, open source on your hardware | 95-100% | NVIDIA GeForce RTX 3060 (12GB VRAM), RTX 4060 Ti (16GB VRAM), or RTX 4070/4070 Ti (12GB VRAM; the 4070 Ti SUPER has 16GB). More VRAM is always better for larger models. Aim for at least 16GB RAM for the system, preferably 32GB or 64GB. A strong multi-core CPU (Intel i7/i9 or AMD Ryzen 7/9) is also beneficial. | Skytech Shiva Gaming PC (RTX 4070, i7-12700F, 16GB RAM) or similar with a good GPU. (Note: Search for “Gaming PC RTX 3060 12GB” or “Gaming PC RTX 4070 Ti SUPER 16GB” to find suitable options. Look for desktops with ample RAM and an SSD.) |
| Cloud API with data privacy protections | 70-85% | Minimal local GPU needs. The processing occurs in the cloud. | Any modern laptop or desktop computer with a reliable internet connection. For example, a well-reviewed Acer Aspire 5 Laptop (an example only; choose based on current reviews and specs). |
| ChatGPT Pro or Gemini Pro via app and/or website | 50-70% | Minimal local GPU needs. Your browser or app handles the interface, and the AI runs entirely on the provider’s cloud. | Any device capable of running a modern web browser, including smartphones, tablets, or basic laptops. For example, a Chromebook (an example only; choose based on current reviews and specs). |

More Recommendations

The following are Claude Opus 4’s recommendations. Now, if only I had $3,500 to spend on hardware (I don’t). But still, it’s nice to know what’s possible.

Based on your needs for AI workloads and gaming with a 4-year lifespan, here’s my final recommendation with full upgrades:

Ultimate AI/Gaming Build for Longevity (~$3,500)

Core Components

GPU: NVIDIA RTX 4080 SUPER (16GB) – $1,050

- Future-proof 16GB VRAM

- Handles 30B+ models with quantization

- Where: Newegg, Best Buy, B&H Photo

CPU: AMD Ryzen 9 7900X – $400

- 12 cores/24 threads for parallel processing

- Where: Amazon, Newegg, Micro Center

Motherboard: ASUS TUF Gaming X670E-Plus – $280

- PCIe 5.0 for future upgrades

- Excellent VRM cooling

- Where: Amazon, Newegg

RAM: 64GB DDR5-6000 (2x32GB) – $220

- Corsair Vengeance or G.Skill Trident Z5

- Essential for larger AI models

- Where: Amazon, Newegg

Storage:

- Primary: 2TB Samsung 990 Pro NVMe – $150

- AI Models: 2TB WD Black SN850X – $130

- Where: Amazon, Best Buy, Newegg

PSU: Corsair RM1000x 80+ Gold – $170

- Headroom for future upgrades

- 10-year warranty

- Where: Amazon, Corsair.com

Case: Fractal Design Torrent – $190

- Best-in-class airflow

- Spacious for upgrades

- Where: Amazon, Newegg

CPU Cooler: Arctic Liquid Freezer II 360 – $110

- Excellent cooling for sustained loads

- Where: Amazon, Arctic Direct

Total Cost: ~$3,500
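As a quick sanity check on that figure, the short script below adds up the component prices exactly as listed in this build. Nothing here is new data; the dictionary simply copies the prices from the list above.

```python
# Prices copied from the component list above (USD).
components = {
    "GPU: NVIDIA RTX 4080 SUPER (16GB)": 1050,
    "CPU: AMD Ryzen 9 7900X": 400,
    "Motherboard: ASUS TUF Gaming X670E-Plus": 280,
    "RAM: 64GB DDR5-6000 (2x32GB)": 220,
    "Storage: 2TB Samsung 990 Pro NVMe": 150,
    "Storage: 2TB WD Black SN850X": 130,
    "PSU: Corsair RM1000x 80+ Gold": 170,
    "Case: Fractal Design Torrent": 190,
    "CPU Cooler: Arctic Liquid Freezer II 360": 110,
}

total = sum(components.values())
print(f"Itemized total: ${total:,}")  # Itemized total: $2,700
```

The itemized parts come to $2,700, so the ~$3,500 headline presumably leaves room for things that are not listed, such as an operating system, tax, peripherals, or system-builder fees. That reading is an assumption, since the recommendation does not itemize the difference.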

Where to Buy (Best Options)

Complete System Builders

- NZXT BLD – Custom configuration with warranty

- Redux PC – Competitive pricing, good support

- iBuyPower – Often has sales/bundles

Component Retailers

- Micro Center – Best in-store deals (if nearby)

- Newegg – Combo deals, wide selection

- Amazon – Fast shipping, easy returns

- B&H Photo – No tax in some states

Why This Build Will Last 4 Years

- 16GB VRAM – Sufficient for AI models through 2028

- 64GB RAM – Handles current and future model requirements

- PCIe 5.0 – Ready for next-gen GPUs/storage

- 12-core CPU – Balanced for AI preprocessing and gaming

- Quality PSU – Reliable power for system longevity

Performance Expectations

AI Capabilities (with Ollama)

- 7B-13B models: Native full precision

- 30B models: Smooth with 4-bit quantization

- 70B models: Usable with aggressive quantization

- Multiple models: Can run 2-3 smaller models simultaneously

Gaming (2024-2028)

- 1440p: 144+ FPS Ultra settings

- 4K: 80+ FPS High settings

- VR: Fully capable

- Ray Tracing: Excellent performance

Money-Saving Tips

- Buy during sales: Black Friday, Prime Day

- Check r/buildapcsales: Daily deals

- Consider open-box: At Micro Center/Newegg

- Bundle deals: Motherboard + CPU combos

Optional Future Upgrades

- Year 2: Add 64GB more RAM (128GB total)

- Year 3: Add 4TB NVMe for model storage

- Year 4: Consider GPU upgrade if needed

This configuration provides excellent headroom for both current AI workloads and future developments, while maintaining top-tier gaming performance throughout its lifespan.
